Azure Databricks Architecture Part-2 (Databricks Cluster)

Introduction:

In this blog post, we are going to discuss Databricks cluster types and the options that need to be selected when creating a Databricks cluster.

Types of Databricks Cluster:

There are mainly two types:

· All-purpose cluster
· Job cluster

Difference Between Them:

| All-purpose Cluster | Job Cluster |
|---|---|
| Created manually | Created by the job |
| Persistent (can be terminated manually) | Non-persistent (terminated when the job ends) |
| Suitable for interactive workloads | Suitable for automated workloads |
| Shared among many users | Isolated, used only by the job |
| Expensive to run | Cheaper to run |

Note: We cannot create a job cluster manually. It is created automatically when the job runs.
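The difference between the two cluster types shows up in how a job task refers to its compute. The sketch below builds two task payloads, assuming the Databricks Jobs API 2.1 task fields `existing_cluster_id` (run on an all-purpose cluster that outlives the job) and `new_cluster` (let the job create its own cluster). The helper names, task keys, cluster ID, runtime key and VM size are hypothetical placeholders.

```python
import json

# Hypothetical job-task payloads modelled on Jobs API 2.1 task fields.
# All IDs, paths, runtime keys and VM sizes are placeholder values.


def task_on_all_purpose_cluster(cluster_id: str, notebook_path: str) -> dict:
    """Run the task on an existing, manually created all-purpose cluster."""
    return {
        "task_key": "interactive_style_task",
        "existing_cluster_id": cluster_id,  # cluster keeps running after the job
        "notebook_task": {"notebook_path": notebook_path},
    }


def task_on_job_cluster(notebook_path: str) -> dict:
    """Let the job create its own cluster, which is removed when the run ends."""
    return {
        "task_key": "automated_task",
        "new_cluster": {
            "spark_version": "13.3.x-scala2.12",  # example runtime key
            "node_type_id": "Standard_DS3_v2",    # example Azure VM size
            "num_workers": 2,
        },
        "notebook_task": {"notebook_path": notebook_path},
    }


if __name__ == "__main__":
    print(json.dumps(task_on_job_cluster("/Shared/etl"), indent=2))
```

The only structural difference is which cluster key the task carries; everything about the job cluster's lifetime is handled by the job run itself.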

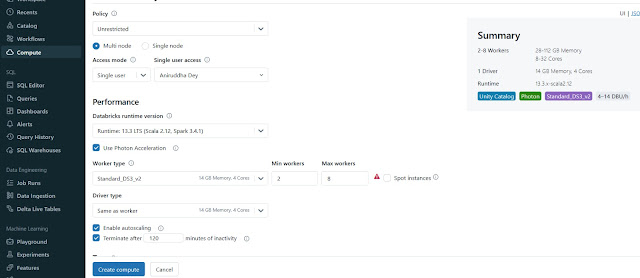

Cluster Configuration Option Details:

We need to select the following options when creating a cluster.

· Single/Multi Node:

A single-node cluster has only one node (the driver); no worker nodes are present, so it is not suitable for large ETL workloads. A multi-node cluster has one driver (master) node and one or more worker nodes, and is generally used to distribute heavy workloads.

· Access Mode:

a. Single User:

Only one user can access the cluster. It supports Python, SQL, Scala and R.

b. Shared:

Multiple users can access the cluster. It is available only in the Premium tier and supports Python and SQL. It provides process isolation: one process cannot see another process's data or credentials.

c. No Isolation Shared:

Multiple users can access the cluster. It supports Python, SQL, Scala and R, and is available in both the Standard and Premium tiers. It does not provide any process isolation, so it is less secure: a failing process can affect others, and one process may consume all the resources.
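To make the node and access-mode choices concrete, here is a minimal sketch of a cluster "create" payload. It assumes the Databricks Clusters API field names `num_workers`, `data_security_mode` (with `SINGLE_USER`, `USER_ISOLATION` for Shared, and `NONE` for No Isolation Shared), `single_user_name`, and the single-node `spark_conf`/`custom_tags` settings. The helper `cluster_spec`, the cluster names, the runtime key and the VM size are hypothetical placeholders.

```python
# Hypothetical helper that builds a Clusters API "create" payload.
# Field names are assumed to follow the Clusters API; values are placeholders.

ACCESS_MODES = {
    "single_user": "SINGLE_USER",
    "shared": "USER_ISOLATION",
    "no_isolation_shared": "NONE",
}


def cluster_spec(name, workers, access_mode="single_user", user=None):
    spec = {
        "cluster_name": name,
        "spark_version": "13.3.x-scala2.12",  # example runtime key
        "node_type_id": "Standard_DS3_v2",    # example Azure VM size
        "data_security_mode": ACCESS_MODES[access_mode],
    }
    if access_mode == "single_user":
        # Single-user mode is tied to one named user.
        if user is None:
            raise ValueError("single-user access mode needs a user name")
        spec["single_user_name"] = user
    if workers == 0:
        # Single node: no workers, the driver runs Spark locally.
        spec["num_workers"] = 0
        spec["spark_conf"] = {
            "spark.databricks.cluster.profile": "singleNode",
            "spark.master": "local[*]",
        }
        spec["custom_tags"] = {"ResourceClass": "SingleNode"}
    else:
        # Multi node: one driver plus `workers` worker nodes.
        spec["num_workers"] = workers
    return spec


dev = cluster_spec("dev-sandbox", 0, user="analyst@example.com")
etl = cluster_spec("shared-etl", 4, access_mode="shared")
```

`dev` is a single-node, single-user cluster; `etl` is a multi-node cluster that several users can share with process isolation.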

Databricks Runtime:

The Databricks Runtime is the set of core software components that runs on a Databricks cluster. There are four runtime options, listed below.

· Databricks Runtime:

Includes an optimized version of Apache Spark and supports Scala, Java, Python and R. It also includes Ubuntu libraries, GPU libraries, Delta Lake and other Databricks services.

· Databricks Runtime ML:

Includes all the libraries from Databricks Runtime plus popular ML libraries such as PyTorch, Keras, TensorFlow and XGBoost.

· Photon Runtime:

Includes everything from Databricks Runtime plus the Photon engine.

· Databricks Runtime Light:

A runtime option for jobs that do not require advanced features.
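In a cluster definition, the runtime choice ends up in the `spark_version` field, and Photon is switched on through the `runtime_engine` field. The sketch below assumes those Clusters API fields and the usual version-key format (`<version>-scala2.12` for the standard runtime, `<version>-cpu-ml-scala2.12` for the CPU ML runtime); `13.3.x` is only an example, and the real keys available in a workspace can be listed with `GET /api/2.0/clusters/spark-versions`. The helper `runtime_fields` is hypothetical, and Runtime Light is deliberately not covered.

```python
# Hypothetical mapping from runtime options to Clusters API fields.
# Version keys are examples; list the real ones for your workspace first.


def runtime_fields(runtime: str, version: str = "13.3.x") -> dict:
    if runtime == "standard":
        return {"spark_version": f"{version}-scala2.12", "runtime_engine": "STANDARD"}
    if runtime == "ml":
        # ML runtimes use their own version key (CPU variant shown here).
        return {"spark_version": f"{version}-cpu-ml-scala2.12", "runtime_engine": "STANDARD"}
    if runtime == "photon":
        # Photon runs on a standard runtime key with the PHOTON engine.
        return {"spark_version": f"{version}-scala2.12", "runtime_engine": "PHOTON"}
    raise ValueError(f"unsupported runtime option in this sketch: {runtime}")
```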

Auto Termination:

Auto termination reduces the unnecessary cost of idle clusters by terminating a cluster after it has been unused for a specified number of minutes. The default value for single-node and standard clusters is 120 minutes, and the duration can be set anywhere from 10 to 10000 minutes.
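The default and the allowed range above can be captured in a small validation helper before a value is placed in the cluster's `autotermination_minutes` field. This is a minimal sketch assuming that field name; the helper names are hypothetical, and `should_terminate` is a toy illustration of the idle-time rule, not how Databricks measures inactivity.

```python
DEFAULT_AUTOTERMINATION_MINUTES = 120
MIN_MINUTES, MAX_MINUTES = 10, 10_000


def autotermination_minutes(minutes=None):
    """Return a value for autotermination_minutes, using the default if unset."""
    if minutes is None:
        return DEFAULT_AUTOTERMINATION_MINUTES
    if not MIN_MINUTES <= minutes <= MAX_MINUTES:
        raise ValueError(
            f"auto termination must be between {MIN_MINUTES} and {MAX_MINUTES} minutes"
        )
    return minutes


def should_terminate(idle_minutes, limit):
    """Toy rule: terminate once the cluster has been idle for `limit` minutes."""
    return idle_minutes >= limit
```

For example, a development cluster might use `autotermination_minutes(30)` so that a forgotten cluster stops after half an hour of inactivity instead of two hours.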

Auto Scaling:

Auto scaling automatically adds and removes worker nodes. We specify the minimum and maximum number of workers, and the cluster scales between them based on the workload. It is not recommended for streaming workloads.
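With auto scaling, the cluster definition carries an `autoscale` block with `min_workers` and `max_workers` instead of a fixed `num_workers` (assuming the Clusters API field names). The `target_workers` function below is a deliberately simplified, hypothetical rule that only illustrates the "stay between min and max" behaviour; it is not Databricks' actual autoscaling algorithm, and `tasks_per_worker` is an invented parameter.

```python
import math


def autoscale_block(min_workers: int, max_workers: int) -> dict:
    """Clusters API 'autoscale' field, used instead of a fixed num_workers."""
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    return {"autoscale": {"min_workers": min_workers, "max_workers": max_workers}}


def target_workers(pending_tasks, tasks_per_worker, min_workers, max_workers):
    """Toy rule: enough workers for the pending tasks, clamped to [min, max]."""
    wanted = math.ceil(pending_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, wanted))
```

With `min_workers=2` and `max_workers=8`, an idle cluster stays at 2 workers, 20 pending tasks at 4 per worker asks for 5, and 100 pending tasks is capped at 8.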

Cluster VM Type / Size:

· Memory optimized
· Compute optimized
· Storage optimized
· General purpose
· GPU accelerated
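The VM category is expressed in the cluster definition through the `node_type_id` (workers) and `driver_node_type_id` (driver) fields, assuming the Clusters API field names. The Azure sizes below are illustrative examples of general purpose, memory, compute and storage optimized, and GPU families; availability and quotas vary by region, so treat the table and the hypothetical helper `vm_fields` as placeholders.

```python
# Illustrative Azure VM sizes per category; check regional availability.
EXAMPLE_NODE_TYPES = {
    "general_purpose": "Standard_DS3_v2",
    "memory_optimized": "Standard_E8s_v3",
    "compute_optimized": "Standard_F8s",
    "storage_optimized": "Standard_L8s_v2",
    "gpu_accelerated": "Standard_NC6s_v3",
}


def vm_fields(worker_category, driver_category=None):
    """Build node type fields; the driver defaults to the worker category."""
    worker = EXAMPLE_NODE_TYPES[worker_category]
    driver = EXAMPLE_NODE_TYPES[driver_category or worker_category]
    return {"node_type_id": worker, "driver_node_type_id": driver}
```

Keeping the driver on a different category than the workers (for example, GPU workers with a general-purpose driver) is possible because the two fields are set independently.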

Cluster Policy:

Admin users can create cluster policies that restrict cluster configuration options and assign them to users and groups.
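A cluster policy is a JSON definition that maps cluster attributes to rules. The sketch assumes the documented policy rule types `fixed` (with `value`), `range` (with `minValue`/`maxValue`/`defaultValue`) and `allowlist` (with `values`); the concrete values are examples. The `violations` function is a hypothetical, simplified local check that only illustrates what each rule type restricts. Databricks enforces policies itself.

```python
# Hypothetical policy definition; the attribute values are examples only.
POLICY_DEFINITION = {
    "spark_version": {"type": "fixed", "value": "13.3.x-scala2.12"},
    "autotermination_minutes": {
        "type": "range", "minValue": 10, "maxValue": 60, "defaultValue": 30,
    },
    "node_type_id": {
        "type": "allowlist", "values": ["Standard_DS3_v2", "Standard_E8s_v3"],
    },
}


def violations(cluster_spec: dict, policy: dict) -> list:
    """Simplified check of a cluster spec against fixed/range/allowlist rules."""
    problems = []
    for field, rule in policy.items():
        value = cluster_spec.get(field)
        kind = rule["type"]
        if kind == "fixed" and value != rule["value"]:
            problems.append(f"{field} must be {rule['value']!r}")
        elif kind == "range" and value is not None and not (
            rule.get("minValue", value) <= value <= rule.get("maxValue", value)
        ):
            # A missing value is skipped; the real policy would apply defaultValue.
            problems.append(f"{field}={value} is outside the allowed range")
        elif kind == "allowlist" and value not in rule["values"]:
            problems.append(f"{field} {value!r} is not allowed")
    return problems
```

A spec that uses the fixed runtime, 30 minutes of auto termination and an allowed VM size passes with no violations, while a GPU node type or a 120-minute timeout would each be reported.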
