Working with Spark – Big Data Hadoop MapReduce

Introduction

Before moving on to the Spark RDD concept, which is the foundation of Spark, we need to understand the concept of Hadoop MapReduce. RDDs improve on Hadoop MapReduce by keeping data in memory, which is claimed to make some workloads up to 100 times faster. In this blog post we are going to discuss Hadoop MapReduce at a conceptual level only.

Hope it will be interesting.

What is MapReduce

MapReduce is a software framework and programming model for processing huge amounts of data. Hadoop can run MapReduce programs written in different languages such as Java, Ruby, Python and C++.

How MapReduce Works

As

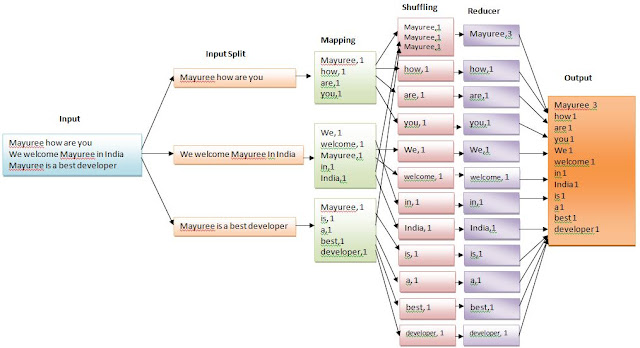

the name specified, MapReduce is a combination of Mapping and Reducing.

We can divide MapReduce into the following sections.

· Input Splits

· Mapping

· Shuffling

· Reducing

Before examining those, let’s take an example.

Consider the following input data for a MapReduce program.

Mayuree how are you

We welcome Mayuree in India

Mayaure is a best developer

Please follow the diagram below.

Final Output:

Input Splits

The input to a MapReduce job is divided into fixed-size pieces called input splits. An input split is a chunk of the input that is consumed by a single map task.
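To make the idea concrete, here is a minimal sketch in plain Python, not Hadoop code. It assumes the input is a list of lines and that a split is simply a fixed number of lines. Hadoop itself works on byte ranges of files, and the name `make_splits` is only an illustration.

```python
def make_splits(records, split_size):
    """Divide records into consecutive chunks of at most split_size items."""
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    return [records[i:i + split_size]
            for i in range(0, len(records), split_size)]


# The sample input from this post, one line per record.
lines = [
    "Mayuree how are you",
    "We welcome Mayuree in India",
    "Mayaure is a best developer",
]

# With split_size=1 (an assumption for this sketch), each line
# becomes its own split and would be handled by its own map task.
splits = make_splits(lines, 1)
for number, split in enumerate(splits):
    print(number, split)
```

Only the last split may be smaller than the others, which is why the sketch says "at most" `split_size` items.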

Mapping

This is the very first phase in the execution of a MapReduce program. In this phase, the data in each split is passed to a mapping function, which produces output values.
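As a rough illustration, assuming the job is the usual word count (the post does not name the job explicitly), a mapping function could look like the sketch below. It is plain Python rather than the Hadoop API, and `map_words` is a made-up name.

```python
def map_words(split):
    """Emit a (word, 1) pair for every word in every line of one split."""
    pairs = []
    for line in split:
        for word in line.split():
            pairs.append((word, 1))
    return pairs


# One split holding the first line of the sample input.
pairs = map_words(["Mayuree how are you"])
print(pairs)
# [('Mayuree', 1), ('how', 1), ('are', 1), ('you', 1)]
```

The mapper does no counting of its own. It only tags each word with a 1 so that later phases can group and add them.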

Shuffling

This phase consumes the output of the Mapping phase. Its task is to consolidate the relevant records from the Mapping phase output, so that all values belonging to the same key end up together.
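A small sketch of this consolidation step, again in plain Python and assuming word-count style (word, 1) pairs as the mapper output. The function name `shuffle` is only for illustration. In a real cluster this step also moves data between machines, which the sketch leaves out.

```python
from collections import defaultdict


def shuffle(pairs):
    """Group values by key and return the groups in sorted key order."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


# Mapper output for a few words from the sample input.
mapped = [("Mayuree", 1), ("how", 1), ("We", 1), ("Mayuree", 1)]
print(shuffle(mapped))
# {'Mayuree': [1, 1], 'We': [1], 'how': [1]}
```

After this step each word appears once, together with the list of all the 1s emitted for it.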

Reducing

In this phase, the output values from the Shuffling phase are aggregated. The phase combines the values for each key from the Shuffling phase and returns a single output value. In short, this phase summarizes the complete dataset.
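For a word-count job (an assumption, as the post does not name the job), the aggregation is just a sum per word. The sketch below is plain Python, and `reduce_counts` is a hypothetical name, not a Hadoop function.

```python
def reduce_counts(grouped):
    """Sum the list of values for each key into a single count."""
    return {word: sum(values) for word, values in grouped.items()}


# Grouped values as they might arrive from the Shuffling phase.
grouped = {"Mayuree": [1, 1], "We": [1], "how": [1]}
print(reduce_counts(grouped))
# {'Mayuree': 2, 'We': 1, 'how': 1}
```

Other jobs would swap `sum` for a different aggregation such as `max` or an average, while the overall shape stays the same.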

Hope you like it.
