Azure Databricks: Accessing Data Lake (Using Access Key)

Introduction:

In this blog post we are going to discuss accessing Azure Data Lake Gen2.

How can we access Azure Data Lake Gen2:

Access can be done by:

- Using a storage access key
- Using a shared access signature (SAS token)
- Using a service principal
- Using Azure Active Directory authentication pass-through
- Using Unity Catalog

In this post we are going to discuss accessing Azure Data Lake Gen2 by using an access key.

Authenticate Data Lake with Access Key:

- Each storage account comes with 2 access keys
- Each access key is 512 bits
- An access key gives full access to the storage account
- Consider it a super user
- A key can be rotated (re-generated)
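As a small sanity check on the 512-bit figure: Azure shows account keys as Base64 text, so a 512-bit key should decode to 64 bytes. The sketch below assumes that encoding and uses a locally generated dummy value, not a real key; the helper name `key_bits` is made up for illustration.

```python
import base64
import os


def key_bits(access_key_b64: str) -> int:
    """Return the size in bits of a Base64-encoded key string."""
    raw = base64.b64decode(access_key_b64, validate=True)
    return len(raw) * 8


# Dummy key generated locally for illustration only; NOT a real Azure key.
dummy_key = base64.b64encode(os.urandom(64)).decode("ascii")

print(len(dummy_key))       # length of the Base64 text
print(key_bits(dummy_key))  # size of the decoded key in bits
```

A check like this can catch a truncated copy-paste of the key before it is handed to Spark.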

Access Key Spark configuration:

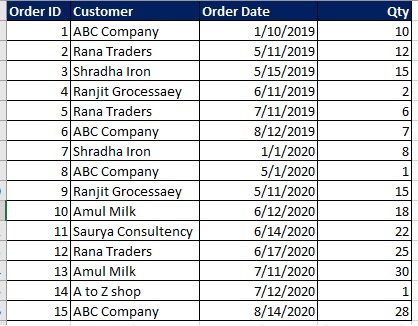

Here we take myschool as the Azure Data Lake Gen2 storage account, with a container named bronze. The bronze container has a CSV file named school.csv.

spark.conf.set(
    "fs.azure.account.key.myschool.dfs.core.windows.net",
    "<512 bit access key in Azure data Lake>")

Microsoft recommends the ABFS (Azure Blob File System) driver for accessing Data Lake Gen2. With its secure abfss scheme, the bronze container is addressed as:

abfss://bronze@myschool.dfs.core.windows.net
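The configuration key and the abfss URI both follow a fixed pattern built from the storage account and container names. A minimal sketch of that pattern is shown here; the helper names `account_key_conf` and `abfss_uri` are hypothetical and not part of any Databricks or Azure library.

```python
def account_key_conf(account: str) -> str:
    """Spark configuration key under which the account's access key is set."""
    return f"fs.azure.account.key.{account}.dfs.core.windows.net"


def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Build an abfss:// URI for a container, optionally with a file path."""
    base = f"abfss://{container}@{account}.dfs.core.windows.net"
    return f"{base}/{path.lstrip('/')}" if path else base


print(account_key_conf("myschool"))
print(abfss_uri("bronze", "myschool"))
print(abfss_uri("bronze", "myschool", "school.csv"))
```

Building the strings in one place helps avoid typos when the same account name has to appear in both the configuration key and the path.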

Notebook command:

spark.conf.set(
    "fs.azure.account.key.myschool.dfs.core.windows.net",
    "<512 bit access key in Azure data Lake>")

dbutils.fs.ls("abfss://bronze@myschool.dfs.core.windows.net")

This gives us the list of files within the bronze container.

display(spark.read.csv("abfss://bronze@myschool.dfs.core.windows.net/school.csv"))

This reads and displays the school.csv file.
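Because the access key behaves like a super user, one option is to avoid pasting it into the notebook and read it from a Databricks secret scope instead. The sketch below assumes a secret scope and secret have already been created; the names `demo-scope` and `myschool-key` and the helper `configure_access_key` are hypothetical. `spark` and `dbutils` are passed in as arguments so that the function does not depend on notebook globals.

```python
def configure_access_key(spark, dbutils, account, scope, secret_name):
    """Set the storage account access key on the Spark session from a secret.

    Returns the configuration key that was set.
    """
    conf_key = f"fs.azure.account.key.{account}.dfs.core.windows.net"
    # dbutils.secrets.get returns the secret value as a string.
    access_key = dbutils.secrets.get(scope=scope, key=secret_name)
    spark.conf.set(conf_key, access_key)
    return conf_key


# Usage inside a Databricks notebook, where `spark` and `dbutils` already exist
# (scope and secret names are assumptions for illustration):
#
# configure_access_key(spark, dbutils, "myschool", "demo-scope", "myschool-key")
# display(spark.read.csv("abfss://bronze@myschool.dfs.core.windows.net/school.csv"))
```

With this approach the key never appears in the notebook source, and rotating it only requires updating the stored secret.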
